This blog posting is the second in a series hosted by ProPEL Matters to examine how big and small data are implicated in changes to professional practices and in the increasing datafication of work and professional learning.

There was a time when I didn’t worry too much about the ontology of data. When I worked in the field of nuclear structure physics, my data were 0s and 1s on hard disks, digital representations of electrical charges and currents generated in gamma-ray and particle detectors. We knew what was happening in the detectors to produce these currents, and we (or our firm- and soft-ware) knew how to interpret these traces into a language of gamma-ray energies and particle momenta.

In fact, the whole system was designed to make this translation. Our data had no independent existence; they were the result of a deliberate and highly-designed datafication of (un)natural processes. Data, then, were effects of (and confined within) networks of scientific practice.

But now something strange has happened to data. They seem to have cut loose, to have escaped from these confining networks of deliberate design, and acquired an independence that confers an aura of objectivity and inexorability. The world now seems to be permeated, even saturated, with measurements, metrics and traces. Every step in our online dance is logged and fed to algorithms that not only analyse the past in an attempt to predict the future, but also increasingly act to choreograph our movements.

In education, proponents of learning analytics hold out enticing visions of personalized learning, automated guidance and feedback, and the real-time, just-in-time generation of learning opportunities (see, e.g., Greller & Drachsler, 2012). McGraw-Hill even go so far as to suggest that their Connect 2 Smartbook can ‘predict the exact moment the learner is about to forget something’ (McGraw-Hill 2017). All of this is based on “learning data,” the digital traces left by students as they work in online environments.

There is a sense that as good professional educators and education researchers, we should be using such data: to improve learning experiences and outcomes, to make learning more efficient, to turn learning systems into smoothly functioning machines that process learners into more knowledgeable, skilful and successful members of society.

But at the same time, as educators and researchers we are at something of a loss. We find ourselves drifting to the edges, peripheral actors in the networks of online resources, student activities, algorithms, data scientists, business interests, internet infrastructure and so on that “learning data” are effects of.

We log into our Learning Management Systems (LMSs) and are presented with data that we don’t understand. The processes through which they are generated and manipulated are mostly black-boxed from us. For many of us, it can seem like we have no choice but to take what we are given, since the black box not only contains a mystery, but also acts as an effective barrier, serving to reinforce the data’s independence both from us and, increasingly, from the real-life humans they are supposed to represent.

This, in turn, can make us wary of learning-data-driven practices and interventions, as we have neither sufficient understanding nor trust. In recent work (Thompson, 2017; Watson et al. 2016a; 2016b; Wilson et al. 2017a; 2017b), our research team (based at Stirling University) has tried to counteract this increasing alienation between educational researchers and learning data by developing some DIY (do-it-yourself) learning analytics. This is a process of deliberate datafication, starting from the most basic information easily available to us through our LMS’s reporting functions.

Our team tried to constantly connect and reconnect the quantitative trace data to the learners themselves through qualitative data more easily related to what educators and educational researchers would recognise as learning – discursive texts extracted from online forums and course submissions.

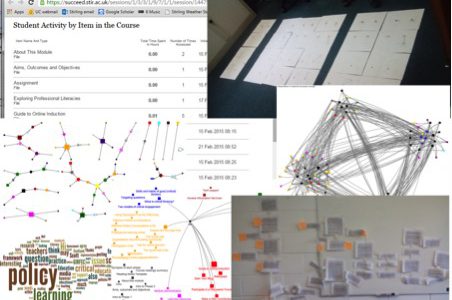

We took the digital trace data from an online Masters-level course and, using some of the processing and visualization tools in the NodeXL add-on to Excel (Hansen, Shneiderman & Smith 2010), we experimented with ways of searching for patterns and correlations in students’ interactions with online resources (Wilson et al. 2017b).
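For readers who would like to try something similar without NodeXL, the basic move of turning raw access logs into a weighted student-resource network can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure our team used: the row format, the student and resource names, and the helper functions `build_edge_weights` and `resource_totals` are all hypothetical stand-ins for whatever your own LMS report provides.

```python
from collections import Counter

# Hypothetical rows from an LMS activity report: one (student, resource)
# pair per logged access. Real exports will have more columns and other names.
log_rows = [
    ("student_a", "week1_reading"),
    ("student_a", "forum"),
    ("student_b", "forum"),
    ("student_a", "forum"),
    ("student_b", "week1_reading"),
    ("student_c", "forum"),
]


def build_edge_weights(rows):
    """Count how often each student accessed each resource.

    The result is a weighted edge list for a two-mode (student-resource)
    network: each key is an edge, each value is its weight.
    """
    return Counter(rows)


def resource_totals(edge_weights):
    """Sum the access counts per resource across all students."""
    totals = Counter()
    for (_student, resource), count in edge_weights.items():
        totals[resource] += count
    return totals


edges = build_edge_weights(log_rows)
totals = resource_totals(edges)

# Print the weighted edges, ready to paste into a network tool or spreadsheet.
for (student, resource), weight in sorted(edges.items()):
    print(f"{student} -> {resource}: {weight}")
```

Even a table this simple is a deliberate act of datafication: deciding what counts as an "access" and which resources to include already shapes what the resulting network can show.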

In this project, we also found ways to visualize the conversations occurring in the online discussion forums, both digitally remediating them and returning to the details of the text (Watson et al. 2016a).

And, as will be discussed in more detail in the next posting in this series by Cate Watson, we combined digital traces and qualitative data to create a rich account of the journey through the module of a single individual (Watson et al. 2016b).

We do not wish to suggest that any of the analytics – digital or analogue – that we developed are ideal or perfect ways of measuring education and learning. Far from it. However, we hope that they will provoke some critical thinking and discussion about the generation and use of data in networks of educational practice.

We also hope others may be encouraged to reintegrate themselves more fully into these networks, re-creating deep connections with educational data of a variety of types. After all, we have a responsibility, both to the students who take our courses and to the decision-makers who design policy on the basis of data, to take the idea of “learning data” seriously.

References

Greller, W., & Drachsler, H. (2012). Translating learning into numbers: A generic framework for learning analytics. Journal of Educational Technology and Society, 15(3), 42-57.

Hansen, D., Shneiderman, B., & Smith, M. A. (2010). Analyzing social media networks with NodeXL: Insights from a connected world. Burlington, MA: Morgan Kaufmann.

McGraw-Hill (2017). Understanding our Adaptive Engine.

Thompson, T. L. (June, 2017). Digital data and professional practices: A posthuman exploration of new responsibilities and tensions. Paper to be presented at 3rd ProPEL International Conference (Linköping, Sweden).

Watson, C., Wilson, A., Drew, V., & Thompson, T. L. (2016a). Criticality and the exercise of politeness in online spaces for professional learning. The Internet and Higher Education, 31, 43-51.

Watson, C., Wilson, A., Drew, V., & Thompson, T. L. (2016b). Small data, online learning and assessment practices in higher education: a case study of failure? Assessment & Evaluation in Higher Education, 1-16.

Wilson, A., Thompson, T. L., Watson, C., Drew, V., & Doyle, S. (2017a). Big data and learning analytics: Singular or plural? First Monday, 22(4).

Wilson, A., Watson, C., Thompson, T. L., Drew, V., & Doyle, S. (2017b). Learning analytics: challenges and limitations. Teaching in Higher Education.